I love learning about new things and falling down research rabbit holes, but sometimes I just need a quick, efficient answer to a question or a concise guide to a task. If I’m trying to figure out how long to roast a chicken or whether Pluto has been reinstated as a planet, I want a short list of bullet points or a simple yes or no.

So, while ChatGPT’s Deep Research feature has proven to be an amazing researcher when I want to immerse myself in a topic, I haven’t made it my default way of using the AI chatbot. The AI model’s built-in knowledge, along with its search tool, resolves pretty much any day-to-day question or issue I might bring to it. I don’t need a formal report that takes 10 minutes to compile just to learn how to make a meal. But I do find the comprehensive answers from Deep Research viscerally appealing, so I decided it was worth comparing it to the standard (GPT-4o) ChatGPT model by giving both a few prompts I could imagine submitting on a whim or with little long-term need.

Beef Wellington

For the first test, I wanted to see how both models would handle a classic, somewhat intimidating recipe: Beef Wellington. This isn’t the kind of dish you can just throw together on a weeknight. It’s a time-consuming, multi-step process that requires patience and precision. If there was ever a meal where Deep Research might prove useful, this was it. I asked both models: “Can you give me a simple recipe for kosher Beef Wellington?”

Regular ChatGPT responded almost instantly with a straightforward, well-structured recipe. It listed ingredients in clear measurements, broke the process down into manageable steps, and offered a few helpful tips to avoid common pitfalls. It was exactly what I needed in a recipe. Deep Research took a full 10 minutes and produced a very long, complex mini-cookbook centered on the dish. I got multiple versions of Beef Wellington, all of which adhered to my specific requests, ranging from a Jamie Geller-inspired method to a 19th-century traditional preparation with some substitutions. That’s not counting the extra suggestions about decorations and an analysis of various types of puff pastry and their butter-to-flour ratios. If I’m honest, I loved it as fuel for my trivia obsession. But if I just wanted to make the dish, it was a bit too much like those recipe blogs where you have to scroll past someone’s entire life story to get to the ingredients list.

TV time

For the second test, I wanted to see if Deep Research could help me buy a TV, so I kept it simple: “What should I consider when buying a new TV?”

Regular ChatGPT gave me a quick and clear answer. It broke things down into screen size, resolution, display type, smart features, and ports. It told me that 4K is standard, 8K is overkill, OLED has better contrast, HDMI 2.1 is great for gaming, and budget matters. I felt like I had a decent grasp of what to look for, and I could have easily walked into a store with that information.

Deep Research asked its usual extra questions about what’s important to me, but it was faster this time, taking only six minutes to deliver a full report on several TVs. Rather than a simple pros and cons list, though, I got a lot of unnecessary detail on things like OLED vs. QLED panels, why TV refresh rates matter for video games, and the impact of compression algorithms on streaming quality. Again, this was all incredibly informative, but entirely unnecessary for my purposes. And unlike the Beef Wellington recipes, the TV buying guide isn’t something I’m going to keep coming back to on a semi-regular basis.

Telescope look

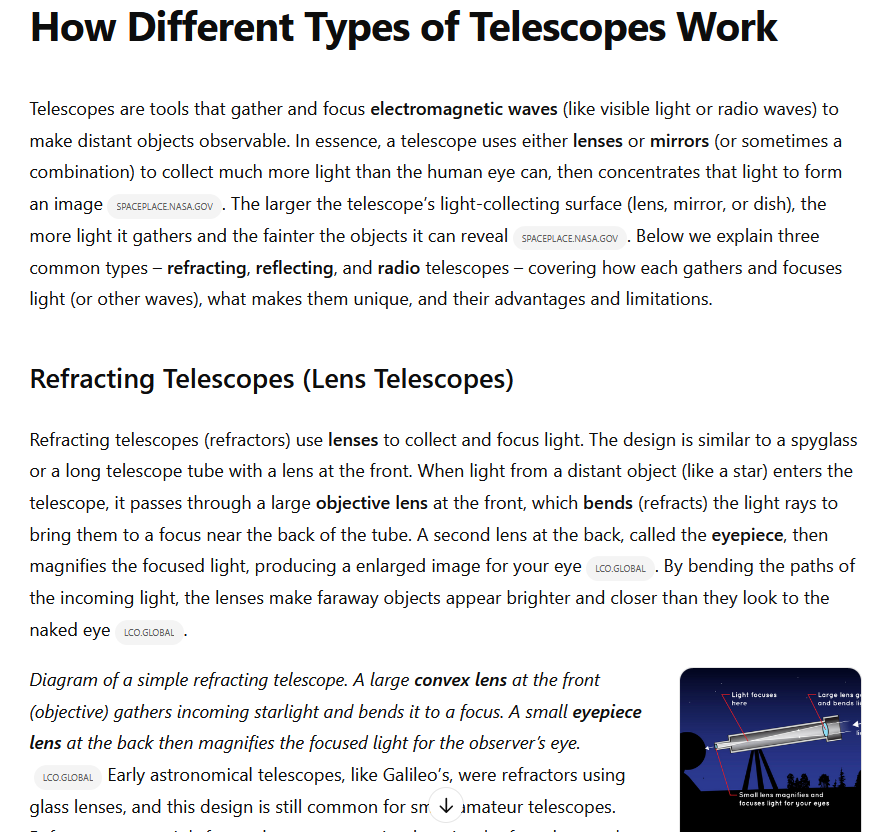

For the final test, I decided to get a little more academic in light of my recent decision to pursue astronomy more seriously as a hobby. Still keeping it brief, I asked, “How does a telescope work?”

Regular ChatGPT responded instantly with a simple, digestible answer. Telescopes gather and magnify light using either lenses (refracting telescopes) or mirrors (reflecting telescopes). It briefly touched on magnification, resolution, and light-gathering power, making it easy to understand without getting too technical.

Deep Research gave me the kind of report I might have written in high school. After it asked how technical I wanted the answer and I replied that I didn’t want it to be technical, I waited about eight minutes for a lengthy discussion of optics, the development of different kinds of telescopes (including radio telescopes), and the mechanisms behind how they all work. The report even included a guide to buying your first telescope and a discussion of atmospheric distortion in ground-based observations. It was answering questions I hadn’t asked. Admittedly, I might ask them at some point, so the anticipation of follow-up queries wasn’t a huge negative in this instance. Still, a couple of sentences about mirrors would have been just fine in the moment.

Deep thoughts

After running these tests, I still see Deep Research as a powerful AI tool with impressive results, but I’m much more aware of its excesses in the context of everyday ChatGPT use. The reports it generates are detailed, well-organized, and surprisingly well-written. For a random tour of interesting information, it’s pretty great, but far more often I just need an answer, not a thesis. Sometimes a shallow dip is preferable to a deep dive.

If the regular ChatGPT approach is accurate and does in seconds what takes Deep Research several minutes and a lot of unnecessary context to provide, that’s going to be my preference 99 times out of 100. Sometimes, less is more. That being said, Deep Research’s shopping advice would be great for a much bigger purchase than a TV, like a car or even a house. But for everyday things, Deep Research is just doing too much. I don’t need a jet engine for an electric scooter, but for a transcontinental flight, that jet engine is good to have on hand.
